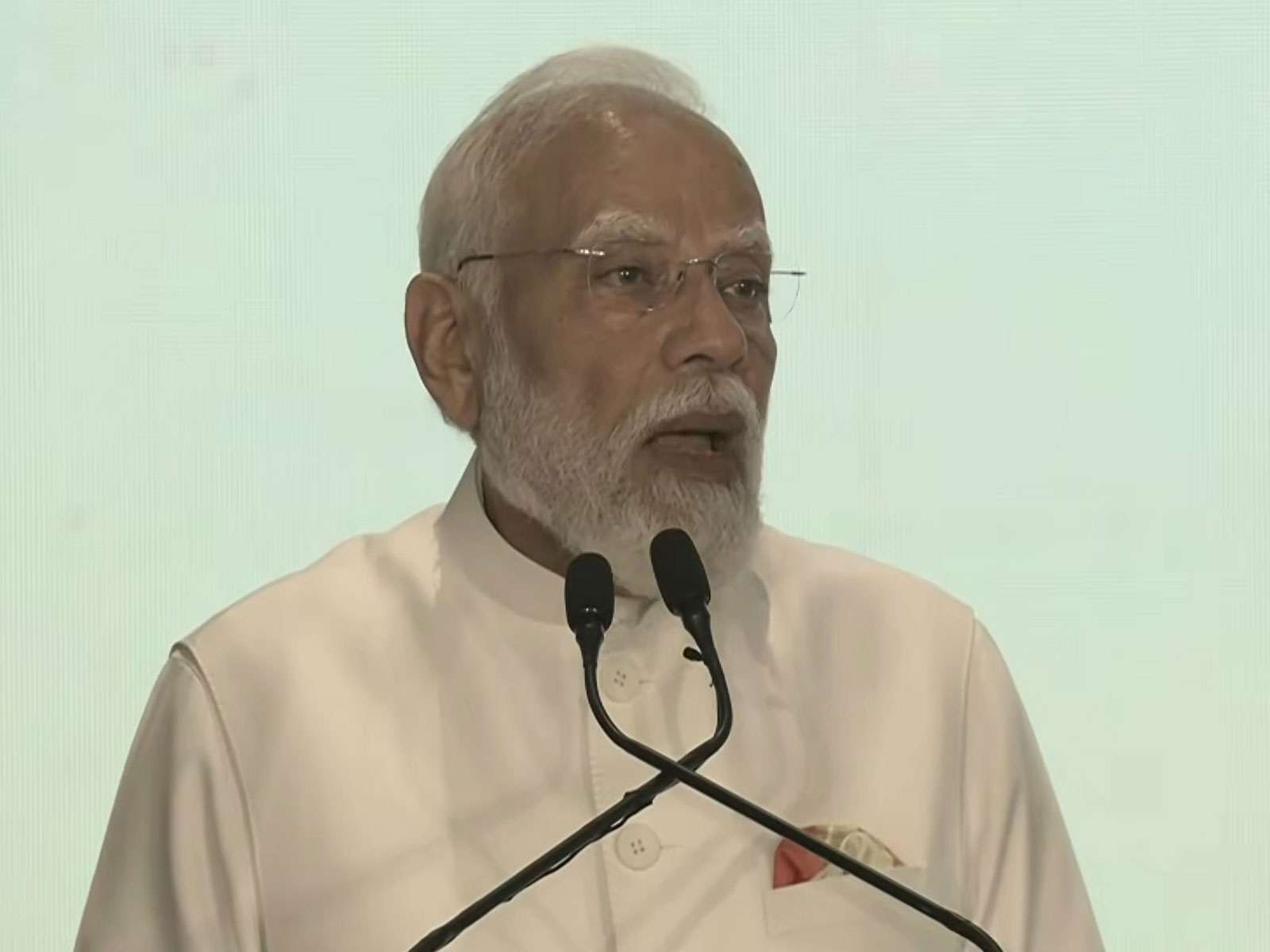

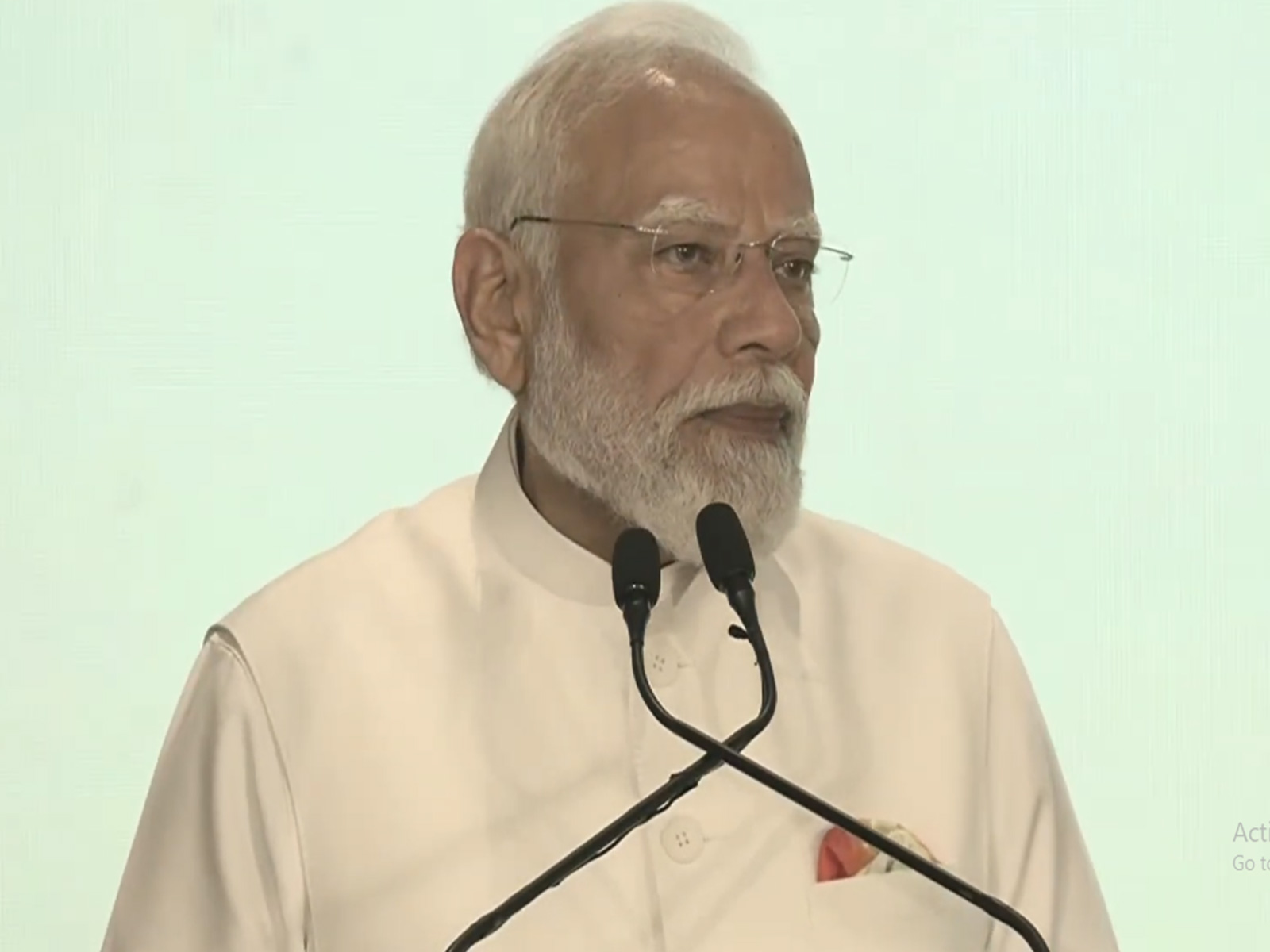

"IndiaAI Safety Institute is a dedicated mechanism for ethical, safe, and responsible deployment of AI systems": PM Modi

Feb 17, 2026

New Delhi [India], February 17: Prime Minister Narendra Modi highlighted concerns about the misuse of AI, including deepfakes and threats to vulnerable groups, and said India is strengthening its regulatory frameworks.

The measures include watermarking AI-generated content, enhancing data protection, and establishing the IndiaAI Safety Institute to promote ethical and responsible AI deployment.

The PM also said India is advocating a balanced approach to Artificial Intelligence that promotes innovation while building strong safeguards to prevent misuse, stressing the need for global cooperation and responsible governance to ensure safe and inclusive "AI for All."

In an interview with ANI, PM Modi said, "Technology is a powerful tool, but it is only a force-multiplier for human intent. It is up to us to ensure that it becomes a force for good. While AI may enhance human capabilities, the ultimate responsibility for decision-making must always remain with human beings. Around the world, societies are debating how AI should be used and governed. India is helping shape this conversation by showing that strong safeguards can coexist with continued innovation."

"India's commitment also extends globally. Just as there are global norms in aviation and shipping to ensure safety and accountability across borders, similarly, the world must work towards common principles and standards in AI. Whether through its role in the 2023 GPAI declaration, the Paris AI discussions, or in the current summit, India has consistently advocated a balanced path of advancing innovation while building safeguards for safe and inclusive #AIForAll," he said.

The Prime Minister also called for a global compact on Artificial Intelligence, stressing that strong safeguards, human oversight and transparency must go hand in hand with innovation to ensure AI is used responsibly and not misused for deepfakes, crime or terrorist activities.

"For this, we need a global compact on AI, built upon certain fundamental principles. These should include effective human oversight, safety-by-design, transparency and strict prohibitions on the use of AI for deepfakes, crime and terrorist activities. India is moving toward a more structured governance approach in AI regulation. With the launch of the IndiaAI Safety Institute in January 2025, the country created a dedicated mechanism to promote the ethical, safe, and responsible deployment of AI systems," PM Modi said.

The Prime Minister said that as Artificial Intelligence becomes more advanced, the responsibility to ensure its safe use must grow stronger.

"As AI becomes more advanced, our sense of responsibility must grow stronger. What makes India's approach distinctive is its focus on local risks and societal realities. The emerging risk assessment framework considers national security concerns as well as harms to vulnerable groups, including deepfakes targeting women, child safety risks, and threats affecting the elderly," he said.

"The urgency of these safeguards is becoming evident to everyone due to the surge in deepfake videos. In response, India notified rules requiring watermarking of AI-generated content and the removal of harmful synthetic media. Alongside content safeguards, the Digital Personal Data Protection Act strengthens data protection and user rights in the digital ecosystem," the Prime Minister said.