SK hynix reveals 48GB 16-layer HBM4 for high-efficiency AI at CES 2026

Jan 06, 2026

Seoul [South Korea], January 6: SK hynix unveiled its next generation of artificial intelligence memory chips, including a 16-layer HBM4, at CES 2026 in Las Vegas on Tuesday. The chipmaker opened a customer-only exhibition booth to deepen engagement with key clients as demand for high-performance memory continues to rise.

According to a report by The Korea Herald, this debut marks a significant step in the company's efforts to provide differentiated memory solutions for the evolving AI ecosystem.

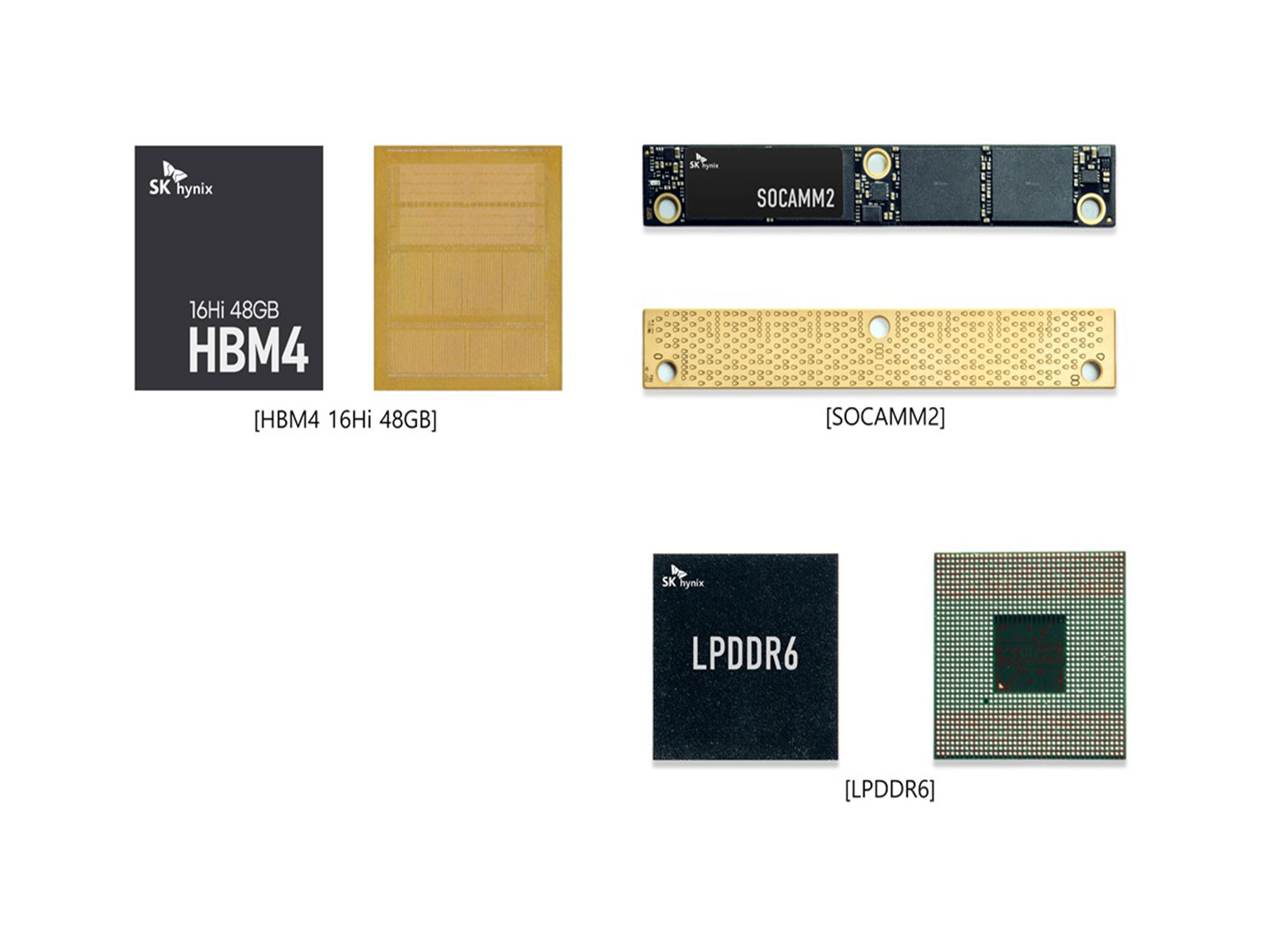

The 16-layer HBM4 model has a capacity of 48 gigabytes. It follows the company's 36GB 12-layer HBM4, which previously recorded the industry's fastest speed of 11.7 gigabits per second. The company said the new 16-layer version is under development in line with customer roadmaps. The advancement is aimed at the technical requirements of global technology firms seeking higher efficiency for AI-driven workloads.
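The capacity figures above imply the same per-layer density for both stacks, which a short calculation makes explicit. The sketch below is illustrative only: the capacities, layer counts, and the 11.7 gigabits per second figure come from the report, while treating that speed as a per-pin rate and using a 2,048-bit stack interface are assumptions not stated in the article. All function and variable names are hypothetical.

```python
# Figures reported in the article: capacity (GB) and layer count per stack.
stacks = {
    "12-layer HBM4": {"capacity_gb": 36, "layers": 12},
    "16-layer HBM4": {"capacity_gb": 48, "layers": 16},
}


def per_layer_gb(capacity_gb: float, layers: int) -> float:
    """Capacity contributed by each stacked layer, in gigabytes."""
    return capacity_gb / layers


per_layer = {
    name: per_layer_gb(spec["capacity_gb"], spec["layers"])
    for name, spec in stacks.items()
}

# ASSUMPTION: the reported 11.7 Gbps is a per-pin data rate.
PIN_SPEED_GBPS = 11.7
# ASSUMPTION: a 2,048-bit interface per stack; the article gives no width.
ASSUMED_INTERFACE_BITS = 2048


def stack_bandwidth_gb_per_s(pin_speed_gbps: float, interface_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin rate times pin count, divided by 8 bits per byte."""
    return pin_speed_gbps * interface_bits / 8


bandwidth = stack_bandwidth_gb_per_s(PIN_SPEED_GBPS, ASSUMED_INTERFACE_BITS)

for name, gb in per_layer.items():
    print(f"{name}: {gb:g} GB per layer")
print(f"Estimated peak bandwidth per stack (under the assumptions): {bandwidth:.1f} GB/s")
```

Both stacks work out to 3 GB per layer, so the jump from 36GB to 48GB comes from the four additional layers rather than from denser individual layers. The bandwidth estimate holds only if both stated assumptions do.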

"As innovation driven by AI accelerates, customers' technical requirements are evolving rapidly," the report quoted Kim Joo-sun, president and head of AI Infrastructure at SK hynix, as saying. "We will respond with differentiated memory solutions and create new value through close cooperation with customers to advance the AI ecosystem."

The exhibition also featured the 12-layer HBM3E, a product SK hynix expects to lead the market throughout the current year. To demonstrate the chips' role within complete AI systems, the company displayed GPU modules for AI servers that incorporate them.

An AI System Demo Zone was set up to show how these memory solutions interconnect. The zone included a large-scale mock-up of a Custom HBM (cHBM) module, which is optimized for specific AI chips or systems to improve overall performance.

"As competition in the AI market shifts from raw performance to inference efficiency and cost optimization, this design visualizes a new approach that integrates some computation and control functions into HBM -- functions previously handled by GPUs or ASICs," the report quoted the company as saying.

Additionally, SK hynix showcased SOCAMM2, a low-power memory module for AI servers, and a 321-layer 2-terabit QLC NAND flash product designed for ultra-high-capacity enterprise SSDs.